Automated Accessibility Testing: From Passive to Preventative

Implementing and scaling automated accessibility testing on REI.com

At REI, we believe that time outside is a fundamental right that all should be able to enjoy. This commitment to inclusion doesn’t start and end with marketing. It’s something we try to live up to every day as we design and engineer new customer features and experiences on REI.com.

Back in 2021, like many other companies, we were stuck in an endless cycle of audit and remediate with our digital accessibility efforts, reacting after the fact to the barriers we were putting in front of our customers. Recognizing a need to act sooner, we set out to engineer an automated solution that would be:

- Effective: producing few false positives.

- Scalable: easy to apply to any microsite environment within our common framework.

- Passive: requiring minimal human intervention to sustain.

- Blocking: not only flagging accessibility issues but preventing them from affecting people in the first place.

Now, two years later, we’ve successfully built and scaled a suite of automated tests that over 90% of our customer-facing applications consume. The suite tests thousands of deployments per year for common accessibility missteps and blocks inaccessible code from seeing the light of day.

We know automation is only 30% of the accessibility testing puzzle, because not everything can be automated today, but we’re proud of the foundation we’ve built and the lessons we’ve learned along the way.

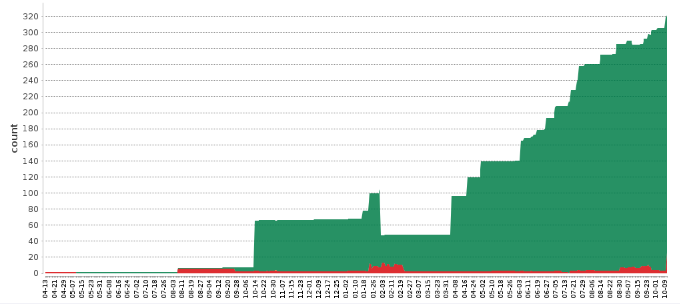

Figure 1: Accessibility Test Count - April '22 to Today

The graph above shows the main benefit of integrating accessibility tests into a common framework instead of relying on a team-by-team approach: rapid adoption. In October 2022, we were running fewer than 20 tests per day. Today, we’re consistently running over 300, with a pass rate above 80%.

Building for Scale

From the beginning, our most important goal was enabling scalability. In talking to individual employees, we learned that accessibility testing often feels intrusive, like extra work added on top of product teams’ day-to-day responsibilities. We wanted a solution that could be a seamless part of the product development process, so our test suite is designed to minimize friction and maximize positive outcomes for both employees and customers.

Scalability also mattered because of the rate of change on REI.com. With the decomposition of our code monolith into individual microsites and services underway, and with our continuous delivery model, new applications are popping up all the time. It’s hard for our small accessibility team to keep up with the maintenance work.

Luckily, many of the customer-facing web applications are built on our in-house framework, Crampon. A common framework allows us to take advantage of pre-existing templating, baking in accessibility best practices and testing infrastructure from day one. It also means that as we started our automated testing journey in 2021, our existing web applications were backwards compatible with our new ideas.

To put into perspective how easy it now is for developers to implement basic accessibility testing in their applications, I’ve included the steps below for a new or existing Crampon application:

For new applications:

- A new application is created via Chairlift, our internal application provisioning service.

- The developer gives the application a name and selects Crampon Application to create a Crampon-based microsite or microservice.

- The developer chooses Includes microsite UI.

- After completing the remaining steps in the setup wizard, the developer has a repository with our starter application, which contains the latest version of Crampon and, by default, an accessibility testing starter template.

- All that’s left to do is specify the URLs to test and add calls to the `isCoOpA11yCompliant` method in their existing Selenium-based system tests. Tests can cover basic static content, and more advanced applications can also cover dynamic page states and conditions.

For existing applications:

- A change to the test suite is introduced in the Crampon repository.

- A new version of Crampon is released.

- Existing microsites upgrade their applications to the newest version of Crampon.

- All that’s left to do is specify the URLs to test and add calls to the `isCoOpA11yCompliant` method in their existing Selenium-based system tests. Tests can cover basic static content, and more advanced applications can also cover dynamic page states and conditions.

As you can see, we’ve taken much of the work of understanding accessibility and test libraries out of the equation, letting test engineers do what they do best: write tests.
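To make the "specify the URLs and call `isCoOpA11yCompliant`" step concrete, here is a minimal Python sketch of what a team’s test might boil down to: a list of pages, each optionally paired with a hook that reaches a dynamic state, checked one by one. The article doesn’t describe the real method’s signature, so `is_co_op_a11y_compliant`, `PageCheck`, the injected `run_axe` callable, and the example URLs are all hypothetical stand-ins. In a real Selenium test, `run_axe` would load the page, inject axe-core, and return its results.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical runner signature: load the URL, call the optional `prepare` hook
# to reach a dynamic state, inject axe-core, and return its results as a dict.
AxeRunner = Callable[[str, Optional[Callable[[], None]]], dict]


@dataclass
class PageCheck:
    """One page, or page state, that a team wants tested."""
    url: str
    prepare: Optional[Callable[[], None]] = None  # e.g. open a modal first


def is_co_op_a11y_compliant(axe_results: dict) -> bool:
    """Hypothetical stand-in for Crampon's check: pass only with zero violations.

    A missing "violations" key raises KeyError instead of silently passing.
    """
    return len(axe_results["violations"]) == 0


def failing_pages(pages: list[PageCheck], run_axe: AxeRunner) -> list[str]:
    """Return the URLs of pages whose axe results are not compliant."""
    return [
        page.url
        for page in pages
        if not is_co_op_a11y_compliant(run_axe(page.url, page.prepare))
    ]


# Example wiring with a fake runner in place of a real Selenium driver.
def fake_run_axe(url: str, prepare: Optional[Callable[[], None]]) -> dict:
    if prepare is not None:
        prepare()
    if url == "/cart":
        return {"violations": [{"id": "button-name", "nodes": []}]}
    return {"violations": []}


demo_pages = [PageCheck("/"), PageCheck("/cart", prepare=lambda: None)]
print(failing_pages(demo_pages, fake_run_axe))  # ['/cart']
```

Injecting the runner keeps the page list and the pass/fail rule independent of the browser plumbing, which is the part a shared framework can own on the team’s behalf.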

Ensuring effectiveness

With scale come challenges to the effectiveness of our test suite. A one-size-fits-all solution isn’t very effective, especially with 22 unique applications serving customer functions from landing pages to checkout. Our product teams also take diverse approaches to writing acceptance criteria, code, and tests.

Our first step was to choose a base test library that could limit false positives while remaining flexible enough to fit each unique application seamlessly and performantly. After extensive testing, we landed on axe-core. It’s free and open source, we were already familiar with it, and it lets us add or remove rules and standards easily.

Then, after careful consideration, we narrowed down our current ruleset to just 42 rules. We intentionally exclude page elements that are included as part of our base template to eliminate test noise and ensure our suite is performant.
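axe-core’s `runOnly` option is one documented way to pin a run to a fixed list of rules. It also accepts `type: "tag"` with values such as `"wcag2aa"`, and a separate `rules` option can enable or disable individual rules. The sketch below assumes the ruleset is expressed as rule IDs. The six IDs are real axe-core rules chosen for illustration, not REI’s 42-rule list, and the helper names are hypothetical.

```python
import json

# Illustrative subset only: real axe-core rule IDs, not the actual 42-rule list.
EXAMPLE_RULES = {
    "image-alt",
    "button-name",
    "link-name",
    "label",
    "html-has-lang",
    "color-contrast",
}


def build_axe_options(rule_ids: set[str]) -> dict:
    """Build an axe-core options object that runs only the given rules."""
    if not rule_ids:
        raise ValueError("an empty ruleset would test nothing")
    # Sorting keeps the serialized options stable between runs.
    return {"runOnly": {"type": "rule", "values": sorted(rule_ids)}}


def axe_options_json(rule_ids: set[str]) -> str:
    """Serialize the options so a browser test could pass them to axe.run()."""
    return json.dumps(build_axe_options(rule_ids))


print(axe_options_json(EXAMPLE_RULES))
```

Keeping the ruleset in one shared place means a rule change ships to every application with the next framework release instead of being edited team by team.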

With a solid ruleset in place, our next move was to exclude the header and footer navigation from testing and test only the core page content of each application. Our navigation persists across many pages, and we found that testing this code once, in isolation, rather than within every application removed a lot of noise from repeated test failures, allowing teams to work faster.

Our navigation (header and footer) still gets accessibility tests of its own. The header and footer exist as their own microsite, which makes them easier to test separately.
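Scoping a run this way maps onto axe-core’s context argument, which takes `include` and `exclude` lists of CSS selectors; when `include` is omitted, axe-core tests the whole document. In this sketch, the selectors `#global-header` and `#global-footer` are assumptions about the navigation markup, and the helper names are hypothetical. The last function checks the property that makes the exclusion safe: everything an application skips is covered by the navigation microsite’s own run.

```python
# Hypothetical selectors for the shared navigation microsite's markup.
NAV_SELECTORS = ["#global-header", "#global-footer"]


def app_context() -> dict:
    """axe-core context for an application page.

    With no "include" key, axe-core tests the whole document, so excluding
    the shared header and footer leaves only the app's own page content.
    """
    return {"exclude": list(NAV_SELECTORS)}


def navigation_context() -> dict:
    """axe-core context for the header/footer microsite: navigation only."""
    return {"include": list(NAV_SELECTORS)}


def excluded_but_untested() -> set[str]:
    """Selectors apps skip that the navigation run would miss (should be empty)."""
    return set(app_context()["exclude"]) - set(navigation_context()["include"])


print(app_context())
print(navigation_context())
print(excluded_but_untested())  # set()
```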

Finally, we needed to make it easy for our tests to block deployments, because we want to prevent issues from impacting customers. To do that durably, we needed tests that pass reliably, which meant accounting for code we don’t own: third-party vendor content. Otherwise, a third party could release inaccessible code and prevent our developers from pushing to production.

We solved this problem by adding a feature to our test suite in Crampon that lets developers skip testing for specific selectors within their UI.
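Here is a minimal sketch of how per-team skip selectors might be combined with framework-level exclusions before being handed to axe-core’s `exclude` context. The function name and the `#vendor-chat-widget` selector are hypothetical; the idea it illustrates is that teams declare only the regions they don’t own, and the framework merges them with its defaults.

```python
def build_exclude_context(base: list[str], team_skips: list[str]) -> dict:
    """Merge framework and team exclusions into an axe-core context object.

    Order is preserved and duplicates are dropped. Blank entries are rejected
    early instead of being passed to axe-core as an invalid selector.
    """
    merged: list[str] = []
    for selector in [*base, *team_skips]:
        cleaned = selector.strip()
        if not cleaned:
            raise ValueError("blank selector in exclusion list")
        if cleaned not in merged:
            merged.append(cleaned)
    return {"exclude": merged}


# Hypothetical: framework defaults plus one team's third-party widget.
framework_defaults = ["#global-header", "#global-footer"]
team_skips = ["#vendor-chat-widget", "#global-footer"]
print(build_exclude_context(framework_defaults, team_skips))
# {'exclude': ['#global-header', '#global-footer', '#vendor-chat-widget']}
```

Excluding vendor regions by selector keeps the rest of the page under test. The trade-off is that anything inside those regions is invisible to the pipeline, so it still needs to be tracked some other way.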

We rolled this out globally by working with individual teams to upgrade to the most recent version of our common framework. With this in place, teams can quickly and easily understand how well their application passes automated accessibility tests, without the noise that navigation and vendor solutions might add.

Ushering in preventative measures

With accessibility tests running on every microsite and in many cases passing, we could have stopped there. However, we were determined to do more than tell people their code had accessibility issues.

We wanted to prevent the negative impacts of inaccessible code before it became reality.

Initially, our test suite ran only in production. In theory, we could have flipped a Boolean flag from false to true and stopped people from shipping code that didn’t pass the tests. But that would have been heavy-handed and could have added friction when folks were on a tight deadline or in a code freeze.

Instead, we chose to implement an identical set of tests in each team’s staging environment. This acts as a proxy for blocking tests with less risk to deployments since teams test their work in staging before pushing to production. So far, this has been effective in preventing regression without negatively impacting our teams’ workflows.
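One way to model the trade-off described above is a per-environment policy: the same tests run everywhere, but only pre-production runs can fail the pipeline. This is a sketch under that assumption; `GatePolicy`, `POLICIES`, and `gate_exit_code` are hypothetical names, not Crampon’s actual configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GatePolicy:
    environment: str
    blocking: bool  # the kind of Boolean flag the article describes flipping


# Assumed policy: identical tests everywhere, but only the pre-production
# (staging/try) run may stop a deployment; production runs are advisory.
POLICIES = {
    "staging": GatePolicy("staging", blocking=True),
    "production": GatePolicy("production", blocking=False),
}


def gate_exit_code(environment: str, violation_count: int) -> int:
    """Return a CI exit code: non-zero only when a blocking run finds violations."""
    policy = POLICIES[environment]
    if violation_count == 0:
        return 0
    if policy.blocking:
        print(f"[{environment}] {violation_count} violation(s): blocking deploy")
        return 1
    print(f"[{environment}] {violation_count} violation(s): advisory only")
    return 0
```

Keeping the flag per environment, rather than as one global switch, is what lets the same suite be strict before release and informational after it.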

Road to maturity

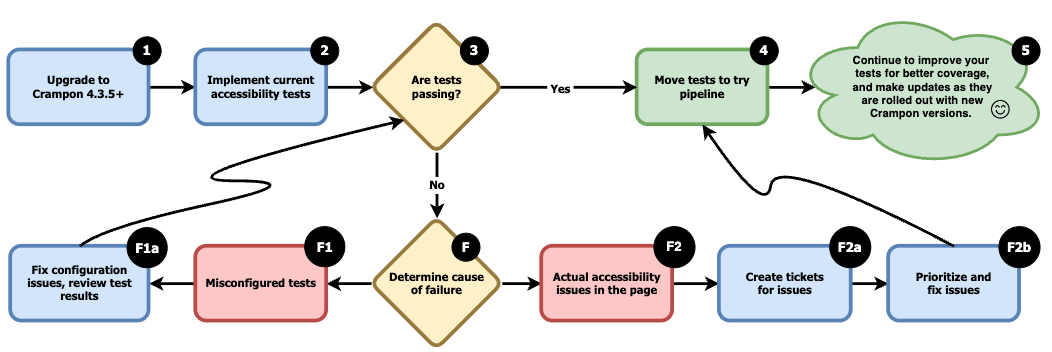

You might be wondering how we scaled so quickly. Much of that success can be attributed to our maturity plan, illustrated in Figure 2 by our Principal Accessibility Engineer, Harmony Hames.

Note: For the purposes of this article, you can treat “staging environment” and “try pipelines” as interchangeable. Both refer to pre-production code.

Figure 2: How REI.com applications implemented our test suite

Getting teams to prioritize accessibility testing wasn’t easy, and it wasn’t an overnight success. Our choices, and the features we developed (like the exclude functionality), were the result of a lot of trial and error. It took us almost two years to reach where we are now. Along the way, we chose to ease teams into change rather than force everything at once, as Figure 2 illustrates:

- Upgrade to the latest version of our framework, Crampon.

- Ensure you’re using the latest test method.

- Get your production tests configured correctly if they’re not.

- Get your production tests passing.

- Implement tests in your staging environment to prevent regression.

At any given time, we’d have two teams in stage one, a few more with passing tests, and a handful of others not yet on the latest version of Crampon. It felt like chaos, but having a well-documented plan for each team to mature its practice, with only one or two things to focus on at a time, provided just the right amount of motivation for teams to achieve what had looked impossible.
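The maturity plan behaves like an ordered checklist, and the "one or two things at a time" rule amounts to "work on the first unmet stages." A small Python sketch, with hypothetical stage names mirroring the five steps listed above:

```python
from enum import IntEnum


class Stage(IntEnum):
    """Hypothetical names for the five maturity steps, in order."""
    UPGRADE_CRAMPON = 1
    LATEST_TEST_METHOD = 2
    PROD_TESTS_CONFIGURED = 3
    PROD_TESTS_PASSING = 4
    STAGING_TESTS = 5


def next_focus(completed: set[Stage], limit: int = 2) -> list[Stage]:
    """Return up to `limit` unmet stages, earliest first."""
    return [stage for stage in Stage if stage not in completed][:limit]


focus = next_focus({Stage.UPGRADE_CRAMPON})
print([stage.name for stage in focus])
# ['LATEST_TEST_METHOD', 'PROD_TESTS_CONFIGURED']
```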

Finding and closing the gaps

The tests are running on most user experiences, passing, and they’re blocking. We’re done, right? Not quite.

Although we were running over 400 tests, split between staging and production, and most of REI.com is templated, we found that our tests were only as good as the templates they covered. There were page types that no accessibility test covered at all.

To solve this problem, we needed to find where the gaps were and then improve each application’s tests to achieve optimal coverage. That meant drastically expanding the scope of our testing, since the problems we were looking for now lived outside our existing URL set.

One way to find which page types weren’t covered, albeit a cumbersome one, would be to browse them as a user would and run a browser extension (in our case axe DevTools, since it uses the same test library as our pipeline tests). If a page had test failures, either the failures came from rules we’d skipped or the page type wasn’t being tested.
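The reasoning in the last sentence can be written as a small classifier. This sketch assumes page types can be derived from the first URL path segment (a hypothetical heuristic; the real page-type mapping isn’t described) and that each failing page comes with the axe-core rule IDs it violated. It checks skipped rules first, since the pipelines would never flag those regardless of coverage, and it adds a hypothetical third bucket for failures that fit neither explanation.

```python
from urllib.parse import urlparse


def page_type(url: str) -> str:
    """Hypothetical heuristic: the first path segment names the page type."""
    path = urlparse(url).path.strip("/")
    return path.split("/")[0] if path else "home"


def classify_failure(
    url: str,
    violated_rules: list[str],
    covered_types: set[str],
    pipeline_rules: set[str],
) -> str:
    """Explain why a page failing in a wide scan slipped past the pipelines."""
    if not violated_rules:
        return "passing"
    if not set(violated_rules) & pipeline_rules:
        # Every failure is on a rule the pipelines deliberately skip.
        return "skipped rules only"
    if page_type(url) not in covered_types:
        # The pipelines would flag this, but no test visits this page type.
        return "untested page type"
    # Covered type and enforced rule: worth a closer look (e.g. an untested state).
    return "needs investigation"


pipeline_rules = {"image-alt", "label"}
covered_types = {"home", "product"}
print(classify_failure("/c/tents", ["image-alt"], covered_types, pipeline_rules))
# untested page type
```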

Based on a set of 2,600 pages that we believed would cover all page types, we estimated this route would take about 86.7 hours (2 min × 2,600 pages ÷ 60 min). That is far too long and too costly, especially if we wanted a solution that could keep up with our site’s pace of change.
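Spelled out, the manual estimate is:

$$
t_{\text{manual}} = \frac{2\ \text{min/page} \times 2{,}600\ \text{pages}}{60\ \text{min/h}} = \frac{5{,}200\ \text{min}}{60\ \text{min/h}} \approx 86.7\ \text{h}
$$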

Arborist

Enter Arborist, our homegrown tool that automates the work described above. It unlocked several benefits for us as we reached for the best possible test coverage:

Increased visibility (9000% larger scope)

- 3rd party audits of REI.com are especially limited in scope due to cost.

- Our pipelines are testing an estimated 400 pages.

- Arborist tests around 2,600 pages.

Increased efficiency (more than 94% reduction in time to results)

- A human triggering the automated tests via a browser extension (axe DevTools, Google Lighthouse, etc.) would take an estimated 86.7 hours (2 min × 2,600 pages ÷ 60 min) to complete a run of 2,600 pages.

- Arborist runs for an average of only 4 hours and 32 minutes.
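The efficiency figure follows from comparing Arborist’s average run time with the manual estimate, with both expressed in minutes (4 h 32 min is 272 min; 2 min × 2,600 pages is 5,200 min):

$$
1 - \frac{t_{\text{Arborist}}}{t_{\text{manual}}} = 1 - \frac{272\ \text{min}}{5{,}200\ \text{min}} \approx 1 - 0.052 = 0.948
$$

That is a reduction of roughly 94.8%.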

Increased frequency

- A human might test pages only as time and bandwidth allows.

- Arborist runs on REI.com every Friday.

Increased flexibility

- Test libraries, people, and standards change. With an extra tool that runs outside of our deployment pipelines, we can now test new enhancements and rule sets before impacting product teams with global changes.
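Because Arborist runs outside the deployment pipelines, it can report violations for rules the pipelines don’t enforce yet, which makes it possible to preview a ruleset change before it reaches teams. A sketch, assuming each Arborist run yields the set of rule IDs each application violates (the data shape, function names, and app names are hypothetical):

```python
def failing_apps(violations: dict[str, set[str]], rules: set[str]) -> set[str]:
    """Apps that would fail a run restricted to `rules`."""
    return {app for app, violated in violations.items() if violated & rules}


def newly_failing(
    violations: dict[str, set[str]], current: set[str], candidate: set[str]
) -> set[str]:
    """Apps that pass under the current ruleset but fail under the candidate."""
    return failing_apps(violations, candidate) - failing_apps(violations, current)


# Hypothetical Arborist output: violated rule IDs per application.
latest_run = {
    "cart": {"label"},
    "search": {"region"},
    "product": set(),
}
print(newly_failing(latest_run, {"label"}, {"label", "region"}))  # {'search'}
```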

Since launching Arborist, we’ve been able to dial in our test coverage and build on the practices we had already worked with teams to adopt in their applications. We’re also getting more data, more often, which has long been a challenge in the accessibility space.
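Trends like the one in Figure 3 come from comparing runs. A minimal sketch of that bookkeeping, assuming each run produces a mapping from page URL to violated axe-core rule IDs (a hypothetical shape; the function names and paths are illustrative): count failing pages per rule, then diff two runs.

```python
from collections import Counter


def summarize_run(page_results: dict[str, list[str]]) -> Counter:
    """Count, per rule, how many pages failed it in one run."""
    counts: Counter = Counter()
    for violated in page_results.values():
        counts.update(set(violated))  # a rule counts at most once per page
    return counts


def run_delta(previous: Counter, current: Counter) -> dict[str, int]:
    """Per-rule change between runs; negative numbers mean fewer failing pages."""
    rules = set(previous) | set(current)
    return {rule: current[rule] - previous[rule] for rule in sorted(rules)}


last_week = summarize_run({"/a": ["label", "label"], "/b": ["image-alt"]})
this_week = summarize_run({"/a": [], "/b": ["image-alt"]})
print(run_delta(last_week, this_week))  # {'image-alt': 0, 'label': -1}
```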

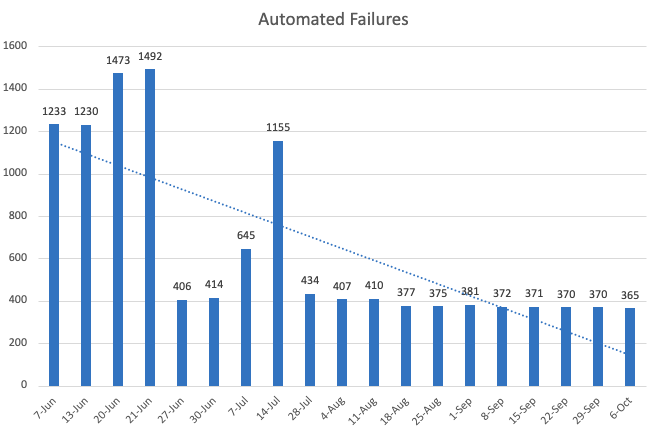

Figure 3: Each run of Arborist has shown a notable decline in automated accessibility issues, indicating that we’re not regressing and highlighting what remains due to third-party vendors.

Conclusion

We still have a lot of work to do: automated accessibility testing can realistically prevent only 30% to 50% of issues, and manual testing is required too. Even so, we’re proud of the testing infrastructure we’ve built, and we hope to close the remaining gaps with the manual testing standards we’re piloting now.

What was once a culture of annual audits and remediation is now one where teams regularly check whether their accessibility tests are passing. We’re actively preventing accessibility issues from reaching customers, leading to a more accessible and friendly shopping experience.

Putting people over profit, so all people can enjoy time outside.