Infrastructure As Code

When I moved from the REI Network Engineering team to our Cloud Shared Services team, I was excited to discover all the ways to automate tasks in a DevOps world. I was tasked with creating our network foundations in AWS: building the VPCs and the network connectivity to them. It was fascinating to see how environments could be quickly built, torn down, and built again with relative ease. I learned that the keys to a successful automated rollout of infrastructure to a cloud environment are consistency and repeatability.

At REI, we primarily use three tools for deploying Infrastructure as Code to our AWS Environments: Terraform, Git, and Jenkins.

Terraform

First, we needed a simple and repeatable way to deploy our infrastructure. While AWS offers CloudFormation, we chose Terraform instead for a couple of reasons:

- It’s cloud-agnostic, so we can keep using the same tool and workflow if we adopt another cloud provider.

- Terraform uses HCL (HashiCorp Configuration Language), which is arguably easier to read and write than the JSON or YAML used with CloudFormation. Fun fact: HCL was inspired by UCL (Universal Configuration Language).

For each team within REI that needs AWS resources, we deploy two accounts: one for Development and one for Production. In each account, we use a standard pattern of AWS resources: VPCs, subnets, IAM roles and policies, AWS Config rules, perimeter security (AWS WAF and GuardDuty), and many others. Each resource is created using Terraform modules.

To better understand the concept of Terraform modules, we’ll use the analogy of setting up a campsite for multiple campers, like you would see on one of our REI Adventures Signature Camping experiences. For each camper, you’ll need a tent, cots, sleeping bags, camp chairs, dining tables, and so on. For the tent “module”, you define the specifics of the tent (what size? what season? what color?). When it’s time for the camp coordinator to determine inventory for the next camp trip, they order the same tent module in the quantity needed. If the specifics of the tent change for next year, the module can be modified and ordered again.

We do the same for building out our AWS Infrastructure. We define modules for IAM users and roles, VPCs, GuardDuty, Budgets, and so on. Then, the Terraform code for each AWS account references each module to suit the needs of that specific account.
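To make the analogy concrete, here is a minimal sketch of what a module and a call to it might look like. It assumes Terraform 0.12 or later syntax. The module name `vpc`, its variables, the directory layout, and the CIDR range are hypothetical and are not taken from our actual code.

```hcl
# modules/vpc/main.tf (hypothetical module: the "tent" design)

variable "name" {
  description = "Name tag applied to the VPC"
  type        = string
}

variable "cidr_block" {
  description = "IPv4 CIDR range for the VPC"
  type        = string
}

resource "aws_vpc" "this" {
  cidr_block           = var.cidr_block
  enable_dns_hostnames = true

  tags = {
    Name = var.name
  }
}

output "vpc_id" {
  description = "ID of the VPC created by this module"
  value       = aws_vpc.this.id
}
```

An account's configuration then "orders" the module by supplying the specifics:

```hcl
# main.tf in an account's configuration (hypothetical values)

module "vpc" {
  source     = "./modules/vpc"
  name       = "finance-dev"
  cidr_block = "10.0.0.0/16"
}
```

Changing the module's design in one place changes what every caller receives the next time it is applied, which is the point of the tent analogy.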

Git

Our Terraform code is stored in Git, the ever-popular open-source version control system (VCS) for storing and sharing code among multiple users. Each user works on their own branch of a shared repository, and changes are peer reviewed before being merged into the master branch, where the official copy is held.

In Git, we create a separate repository for each Terraform module. We also create a separate repository for each AWS account (Finance Development, Finance Production, Ecommerce Development, Ecommerce Production, etc.). The account repositories reference the module repositories, selecting the necessary resources for each account.

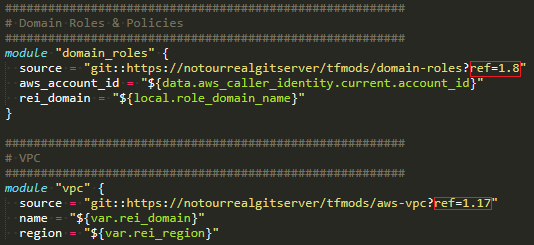

We use Git tags in the Terraform module repositories to version each module. That way, if a module is on version 1.9 and all accounts currently reference that version, an update to the module can be tagged 1.10 without affecting any account. When it comes time to roll out the new version, each account can be updated to point to it on the time frame that works best for that customer.

Example of Terraform module usage with git tags referenced in red
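As a rough sketch of the pattern described above, a module call in an account repository can pin a module repository to a Git tag through the `ref` argument of the source URL. The host `git.example.com`, the repository paths, and the module names below are placeholders, the GuardDuty tag is invented for illustration, and module inputs are omitted for brevity.

```hcl
# main.tf in an account repository (hypothetical names and URLs)

module "aws_config" {
  # "?ref=" pins this account to the module's 1.9 tag. The account stays
  # on 1.9 until someone changes the ref to a newer tag such as 1.10.
  source = "git::https://git.example.com/terraform-modules/aws-config.git?ref=1.9"
}

module "guardduty" {
  # Each module is versioned independently, so tags can differ per module.
  source = "git::https://git.example.com/terraform-modules/guardduty.git?ref=2.3"
}
```

Terraform fetches the tagged revision when `terraform init` runs, so a change to a `ref` takes effect only after the account's configuration is initialized again.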

Jenkins

We use Jenkins to run our Terraform code. Jenkins is an open-source Continuous Integration (CI) server that runs build jobs. Jobs can be run manually or triggered by an event, such as a push to a Git repository or a merge to the master branch.

For each of our AWS accounts, we have Jenkins “commit” and “apply” jobs. The “commit” job validates the changes before implementation by previewing them with the terraform plan command, and the “apply” job carries out the implementation with the terraform apply command.

All together now…

Putting it all together, here is a sample scenario using all three tools:

An engineer wants to update an account’s configuration to use the latest version of the AWS Config module. They would follow these steps:

- Using Git, they pull the latest version of the account’s master branch to confirm that they’re working from the latest version.

- Next, they create and check out a branch named pr/config-update (pr being an abbreviation for pull request).

- In the Terraform section that references the AWS Config module, they update the tag reference to the latest version. Then, they push the updated branch back to the Git repository.

- Since the prefix of the branch is “pr/”, Jenkins detects the push and runs a “commit” job. Using the terraform plan command, the job validates the changes without applying them.

- Once the edits are complete and the “commit” job is successful, they create a Git pull request for peer engineers to review the change.

- After the pull request is approved, the engineer can merge the changes into the master branch. Jenkins detects the merge to master and runs the “apply” job to implement the changes.

- During the “apply” job, Jenkins invokes a Slack bot that prompts the user to confirm the change. The user sends the “approve” command to the bot, and Jenkins runs the terraform apply command to execute the changes.

Conclusion

Using this combination of Terraform, Git, and Jenkins has given us a successful platform to deploy our Infrastructure as Code to the AWS accounts for our internal customers. The consistency and repeatability of this model assures our team that our accounts will be uniform in configuration across the organization.